Graphs’ Counterfactual Explainability Landscape: current state and frontiers

Invited talk at XAI.it 2023, the 4th Italian Workshop on Explainable Artificial Intelligence, co-located with AIxIA 2023, 8 November 2023

Organisers

Prof. Giovanni Stilo


Abstract

Counterfactual Explanation (CE) techniques have garnered attention as a means of providing insights to users who interact with AI systems. While CE has been extensively researched in domains such as medical imaging and autonomous vehicles, Graph Counterfactual Explanation (GCE) methods remain comparatively under-explored. A GCE method generates a new graph that is similar to the original one but for which the underlying predictive model produces a different outcome. In this presentation, we take you on a journey through the GCE landscape, starting with the foundational concepts. Next, we introduce a categorization of explainers, highlight the key tools needed to start working in this field, explore the latest advancements, offer a visual comparison of various generative methods, and conclude with our final remarks.
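To make the definition concrete, here is a minimal sketch, assuming graphs are undirected edge sets over a fixed node set and using a hypothetical toy classifier (`has_triangle`) in place of a trained GNN. The exhaustive search returns a graph at minimal edge-flip distance whose prediction differs from the original one. All names here are illustrative; this is not one of the explainers discussed in the talk, and exhaustive search grows combinatorially with graph size, which is precisely why dedicated GCE methods exist.

```python
from itertools import combinations

# A graph is a frozenset of undirected edges (u, v) with u < v,
# over the fixed node set {0, ..., n-1}.


def has_triangle(edges, n):
    """Hypothetical toy 'predictive model': label 1 if the graph contains
    a triangle, 0 otherwise. Stands in for a trained graph classifier."""
    adj = {i: set() for i in range(n)}
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    # An edge (u, v) lies on a triangle iff u and v share a neighbour.
    return int(any(adj[u] & adj[v] for u, v in edges))


def flip(edges, pair):
    """Remove the edge if it is present, add it otherwise."""
    return edges - {pair} if pair in edges else edges | {pair}


def brute_force_counterfactual(edges, n, model, max_edits=2):
    """Return (counterfactual graph, flipped pairs) using the fewest edge
    flips (at most max_edits) that change the model's prediction,
    or (None, ()) if no such graph exists within the budget."""
    original = model(edges, n)
    all_pairs = list(combinations(range(n), 2))
    for k in range(1, max_edits + 1):  # smallest edit distance first
        for pairs in combinations(all_pairs, k):
            candidate = edges
            for p in pairs:
                candidate = flip(candidate, p)
            if model(candidate, n) != original:
                return candidate, pairs
    return None, ()


# Usage: a 4-cycle has no triangle (label 0); adding one chord creates one.
square = frozenset({(0, 1), (1, 2), (2, 3), (0, 3)})
cf, edits = brute_force_counterfactual(square, 4, has_triangle)
print(has_triangle(square, 4), has_triangle(cf, 4), edits)  # 0 1 ((0, 2),)
```

The search checks all single-edge flips before any pair of flips, so the first graph found is a closest counterfactual in terms of edge edits, reflecting the "similar to the original one" requirement.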

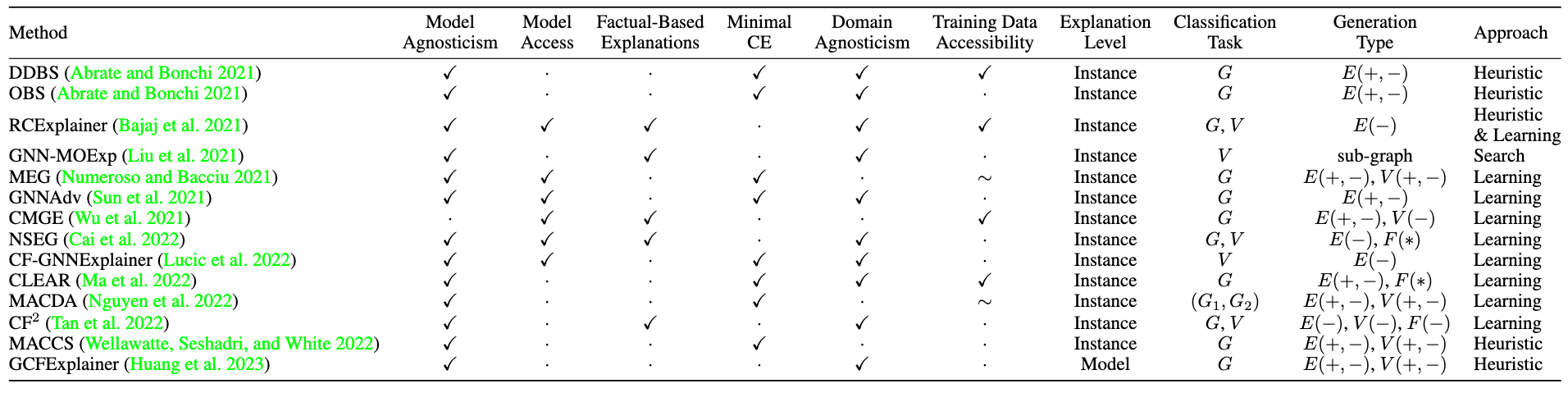

Table 1: The most important references on the research topic.

The talk is partially based on our ACM Computing Surveys article, “A Survey on Graph Counterfactual Explanations: Definitions, Methods, Evaluation, and Research Challenges”. Use the following BibTeX to cite our paper:

@article{10.1145/3618105,
  author = {Prado-Romero, Mario Alfonso and Prenkaj, Bardh and Stilo, Giovanni and Giannotti, Fosca},
  title = {A Survey on Graph Counterfactual Explanations: Definitions, Methods, Evaluation, and Research Challenges},
  year = {2023},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  issn = {0360-0300},
  url = {https://doi.org/10.1145/3618105},
  doi = {10.1145/3618105},
  journal = {ACM Computing Surveys},
  month = {sep}
}

Upcoming events at AAAI 2024

We are delighted to announce that our proposals for both a tutorial and a lab have been accepted for presentation at the Thirty-Eighth AAAI Conference on Artificial Intelligence (AAAI-24), scheduled to take place at the Vancouver Convention Centre in Vancouver, British Columbia, Canada, from February 20 to February 27, 2024.

Do not miss our tutorial session titled:

- “Graphs Counterfactual Explainability: A Comprehensive Landscape” (Website of the Tutorial)

and the closely associated laboratory session named:

- “Digging into the Landscape of Graphs Counterfactual Explainability” (Website of the Laboratory)

Giovanni Stilo

is an associate professor of Computer Science and Data Science at the University of L’Aquila, where he leads the Master’s Degree in Data Science and is a member of the Artificial Intelligence and Information Mining collective. He received his PhD in Computer Science in 2013 and, in 2014, was a visiting researcher at Yahoo! Labs in Barcelona. His research interests span machine learning, data mining, and artificial intelligence, with a particular (but not exclusive) focus on trustworthiness aspects such as bias, fairness, and explainability. In particular, he leads the GRETEL project, which is devoted to supporting research in the field of Graph Counterfactual Explainability. Between 2020 and 2023, he co-organized a long series of top-tier international events and journal special issues on Bias and Fairness in Search and Recommendation. He serves on the editorial boards of IEEE, ACM, Springer, and Elsevier journals such as TITS, TKDE, DMKD, AI, KAIS, and AIIM. He is responsible for “New technologies for data collection, preparation, and analysis” within the Territory Aperti project, coordinates the activities on “Responsible Data Science and Training” of the PNRR SoBigData.it project, and is the PI of the “FAIR-EDU: Promote FAIRness in EDUcation Institutions” project. Throughout his academic career, he has devoted much of his attention to teaching and tutoring, working on more than 30 national and international theses at the B.Sc., M.Sc., and PhD levels. Over more than ten years in academia, he has taught university-level courses on ten different topics, at various degree levels, in the scientific field of Computer and Data Science.

Bibliography

[1] Mario Alfonso Prado-Romero, Bardh Prenkaj, Giovanni Stilo, and Fosca Giannotti. A Survey on Graph Counterfactual Explanations: Definitions, Methods, Evaluation, and Research Challenges. ACM Comput. Surv. (September 2023). https://doi.org/10.1145/3618105

[2] Mario Alfonso Prado-Romero and Giovanni Stilo. 2022. GRETEL: Graph Counterfactual Explanation Evaluation Framework. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management (CIKM ’22). Association for Computing Machinery, New York, NY, USA, 4389–4393. https://doi.org/10.1145/3511808.3557608

[3] Mario Alfonso Prado-Romero, Bardh Prenkaj, and Giovanni Stilo. 2023. Developing and Evaluating Graph Counterfactual Explanation with GRETEL. In Proceedings of the Sixteenth ACM International Conference on Web Search and Data Mining (WSDM ’23). Association for Computing Machinery, New York, NY, USA, 1180–1183. https://doi.org/10.1145/3539597.3573026

[4] F. Scarselli, M. Gori, A. C. Tsoi, M. Hagenbuchner and G. Monfardini, The Graph Neural Network Model, in IEEE Transactions on Neural Networks, vol. 20, no. 1, pp. 61-80, Jan. 2009, https://doi.org/10.1109/TNN.2008.2005605

[5] Z. Wu, S. Pan, F. Chen, G. Long, C. Zhang and P. S. Yu, A Comprehensive Survey on Graph Neural Networks, in IEEE Transactions on Neural Networks and Learning Systems, vol. 32, no. 1, pp. 4-24, Jan. 2021, https://doi.org/10.1109/TNNLS.2020.2978386

[6] Q. Huang, M. Yamada, Y. Tian, D. Singh and Y. Chang, GraphLIME: Local Interpretable Model Explanations for Graph Neural Networks, in IEEE Transactions on Knowledge and Data Engineering, vol. 35, no. 7, pp. 6968-6972, 1 July 2023, https://doi.org/10.1109/TKDE.2022.3187455

[7] Y. Liu, C. Chen, Y. Liu, X. Zhang and S. Xie, Multi-objective Explanations of GNN Predictions, 2021 IEEE International Conference on Data Mining (ICDM), Auckland, New Zealand, 2021, pp. 409-418, https://doi.org/10.1109/ICDM51629.2021.00052

[8] Carlo Abrate and Francesco Bonchi. 2021. Counterfactual Graphs for Explainable Classification of Brain Networks. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining (KDD ’21). Association for Computing Machinery, New York, NY, USA, 2495–2504. https://doi.org/10.1145/3447548.3467154

[9] Bajaj, M., Chu, L., Xue, Z., Pei, J., Wang, L., Lam, P., and Zhang, Y. Robust Counterfactual Explanations on Graph Neural Networks. Advances in Neural Information Processing Systems, 34 (2021).

[10] Wellawatte GP, Seshadri A, White AD. Model agnostic generation of counterfactual explanations for molecules. Chem Sci. 2022 Feb 16;13(13):3697-3705. https://doi.org/10.1039/d1sc05259d

[11] Cai, R., Zhu, Y., Chen, X., Fang, Y., Wu, M., Qiao, J., and Hao, Z. 2022. On the Probability of Necessity and Sufficiency of Explaining Graph Neural Networks: A Lower Bound Optimization Approach. arXiv preprint arXiv:2212.07056

[12] Ana Lucic, Maartje A. Ter Hoeve, Gabriele Tolomei, Maarten De Rijke, Fabrizio Silvestri, CF-GNNExplainer: Counterfactual Explanations for Graph Neural Networks, in Proceedings of The 25th International Conference on Artificial Intelligence and Statistics, PMLR 151:4499-4511, 2022.

[13] Ma, J.; Guo, R.; Mishra, S.; Zhang, A.; and Li, J. 2022. CLEAR: Generative Counterfactual Explanations on Graphs. Advances in Neural Information Processing Systems, 35, 25895–25907.

[14] T. M. Nguyen, T. P. Quinn, T. Nguyen and T. Tran, Explaining Black Box Drug Target Prediction Through Model Agnostic Counterfactual Samples, in IEEE/ACM Transactions on Computational Biology and Bioinformatics, vol. 20, no. 2, pp. 1020-1029, 1 March-April 2023, https://doi.org/10.1109/TCBB.2022.3190266

[15] Numeroso, Danilo and Bacciu, Davide, MEG: Generating Molecular Counterfactual Explanations for Deep Graph Networks, 2021 International Joint Conference on Neural Networks (IJCNN). https://doi.org/10.1109/IJCNN52387.2021.9534266

[16] Sun, Y., Valente, A., Liu, S., & Wang, D. (2021). Preserve, Promote, or Attack? GNN Explanation via Topology Perturbation. ArXiv. https://arxiv.org/abs/2103.13944

[17] Juntao Tan, Shijie Geng, Zuohui Fu, Yingqiang Ge, Shuyuan Xu, Yunqi Li, and Yongfeng Zhang. 2022. Learning and Evaluating Graph Neural Network Explanations based on Counterfactual and Factual Reasoning. In Proceedings of the ACM Web Conference 2022 (WWW ’22). https://doi.org/10.1145/3485447.3511948

[18] Wu, H.; Chen, W.; Xu, S.; and Xu, B. 2021. Counterfactual Supporting Facts Extraction for Explainable Medical Record Based Diagnosis with Graph Network. In Proc. of the 2021 Conf. of the North American Chapter of the Assoc. for Comp. Linguistics: Human Lang. Techs., 1942–1955. https://aclanthology.org/2021.naacl-main.156/

[19] Zexi Huang, Mert Kosan, Sourav Medya, Sayan Ranu, and Ambuj Singh. 2023. Global Counterfactual Explainer for Graph Neural Networks. In Proceedings of the Sixteenth ACM International Conference on Web Search and Data Mining (WSDM ’23). Association for Computing Machinery, New York, NY, USA, 141–149. https://doi.org/10.1145/3539597.3570376

Acknowledgement

The “Digging into the Landscape of Graphs Counterfactual Explainability (Laboratory at AAAI 2024)” event was organised as part of the initiatives of the SoBigData.it project (Prot. IR0000013 - Call n. 3264 of 12/28/2021) aimed at training new users and communities in the use of the research infrastructure (SoBigData.eu). SoBigData.it receives funding from the European Union – NextGenerationEU – National Recovery and Resilience Plan (Piano Nazionale di Ripresa e Resilienza, PNRR) – Project: “SoBigData.it – Strengthening the Italian RI for Social Mining and Big Data Analytics” – Prot. IR0000013 – Avviso n. 3264 del 28/12/2021.